NICHOLAS DASS

Optimizing Warehouse Sustainability - Reinforcement Learning AMR

This project was developed as part of the Reinforcement Learning course in the Master of Management in Artificial Intelligence (MMAI) program. It demonstrates how Reinforcement Learning (RL) can enhance warehouse efficiency and sustainability by optimizing the navigation of Autonomous Mobile Robots (AMRs). Through path planning, the project aimed to reduce energy consumption, CO₂ emissions, and inefficiencies in warehouse logistics.

Project Goals

Train an AMR to navigate a warehouse grid efficiently.

Reduce training time and energy consumption through optimized reinforcement learning techniques.

Improve operational efficiency by minimizing unnecessary movements and reducing idle time.

Assess the environmental impact of AI-driven warehouse logistics.

Challenges & Solutions

Looping Behavior: The AMR occasionally entered repetitive cycles, oscillating between two locations after reaching shelves. Reward structure adjustments and penalties for repetitive movements mitigated this.

Training Efficiency: Initial training episodes took 52 minutes each due to visualization rendering. Switching to post-training visualization reduced training time per episode to a fraction of a second.

Before Optimization Video:

This video illustrates the AMR's erratic movements and inefficiencies during the initial training phase. It frequently collides with obstacles and struggles to reach shelves efficiently.

Balancing Exploration vs. Exploitation: An epsilon-greedy policy ensured that the AMR explored the warehouse sufficiently before settling on an optimal path.
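The exploration strategy above can be sketched as follows. This is a minimal illustration, not the project's actual code: the function names and the decay schedule (start, floor, and decay rate) are assumptions chosen for the example.

```python
import random

def epsilon_greedy(q_values, epsilon, rng=random):
    """With probability epsilon pick a random action, otherwise the greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))          # explore
    return max(range(len(q_values)), key=lambda a: q_values[a])  # exploit

def decayed_epsilon(episode, start=1.0, end=0.05, decay=0.995):
    """Exponentially shrink epsilon toward a floor (all three values are assumed)."""
    return max(end, start * decay ** episode)

if __name__ == "__main__":
    q_row = [0.1, 0.9, 0.3, 0.2]   # hypothetical Q-values for left, right, up, down
    for episode in (0, 100, 1000):
        eps = decayed_epsilon(episode)
        print(episode, round(eps, 3), epsilon_greedy(q_row, eps))
```

Starting with a high epsilon and decaying it is one common way to explore broadly early on and exploit the learned path later; a fixed epsilon would also fit the description above.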

Technical Approach

Algorithm Used: Q-learning-based reinforcement learning.

Warehouse Simulation Grid: The environment was represented as a 10x10 grid with obstacles and designated shelf locations.

Action Space: The AMR could move left, right, up, and down, mimicking real-world navigation constraints.

Reward Structure: The AMR was rewarded for reaching shelves efficiently and penalized for collisions or redundant movements.
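The components listed above can be combined into a small tabular Q-learning sketch. Only the 10x10 grid, the four actions, and the general reward idea come from this write-up; the obstacle layout, shelf position, reward magnitudes, and hyperparameters below are hypothetical. The extra penalty for stepping straight back to the previous cell is one simple way to discourage oscillation, and it is a simplification because the previous cell is not part of the state.

```python
import random

SIZE = 10                                      # 10x10 grid, as in the project
ACTIONS = [(-1, 0), (1, 0), (0, -1), (0, 1)]   # up, down, left, right
OBSTACLES = {(3, 3), (3, 4), (6, 6)}           # hypothetical layout
SHELF = (9, 9)                                 # hypothetical shelf location
START = (0, 0)

def step(state, action, previous):
    """Apply one move and return (next_state, reward, done)."""
    r = state[0] + ACTIONS[action][0]
    c = state[1] + ACTIONS[action][1]
    if not (0 <= r < SIZE and 0 <= c < SIZE) or (r, c) in OBSTACLES:
        return state, -5.0, False              # collision: stay put, penalty
    nxt = (r, c)
    if nxt == SHELF:
        return nxt, 50.0, True                 # reached the shelf
    reward = -1.0                              # step cost favors short routes
    if nxt == previous:
        reward -= 2.0                          # discourage back-and-forth moves
    return nxt, reward, False

def train(episodes=2000, alpha=0.1, gamma=0.95, epsilon=0.1,
          max_steps=200, seed=0):
    """Tabular Q-learning with a fixed epsilon-greedy policy (no rendering)."""
    rng = random.Random(seed)
    q = {(r, c): [0.0] * 4 for r in range(SIZE) for c in range(SIZE)}
    for _ in range(episodes):
        state, previous = START, None
        for _ in range(max_steps):
            if rng.random() < epsilon:
                action = rng.randrange(4)
            else:
                action = max(range(4), key=lambda a: q[state][a])
            nxt, reward, done = step(state, action, previous)
            # Q-learning update: bootstrap from the best next action
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            previous, state = state, nxt
            if done:
                break
    return q

def greedy_path(q, max_steps=100):
    """Follow the learned greedy policy from START and record visited cells."""
    state, previous, path = START, None, [START]
    for _ in range(max_steps):
        action = max(range(4), key=lambda a: q[state][a])
        nxt, _, done = step(state, action, previous)
        previous, state = state, nxt
        path.append(state)
        if done:
            break
    return path

if __name__ == "__main__":
    print(greedy_path(train()))
```

Training here runs without any rendering; the learned path is only printed (or could be visualized) afterward, which mirrors the post-training visualization approach described under Challenges & Solutions.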

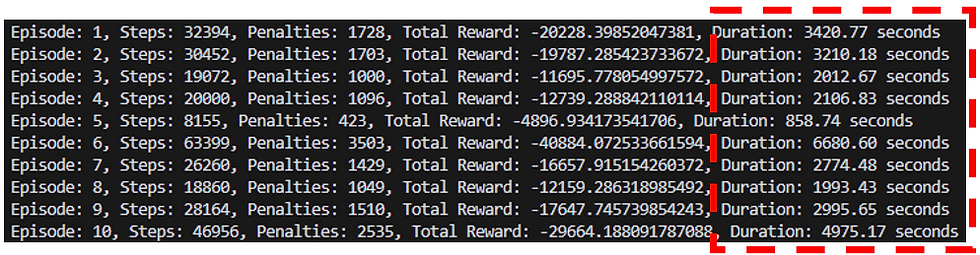

Performance Metrics Before Optimization:

Key Sustainability Impact

| Impact Area | Before Optimization | After Optimization | Improvement |
|---|---|---|---|
| Training Time per Episode | 52 minutes | < 1 second | 99.9% reduction |
| Energy Consumption (1000 episodes) | 435 kWh | Negligible | 435 kWh saved |
| CO₂ Emissions (Training) | 19.9 kg CO₂ | Minimal | 19.9 kg CO₂ reduction |
| Operational Power Draw | 100 W | 70 W | 30% reduction |
| Annual Energy Savings (Operation) | N/A | 109.5 kWh | 5 kg CO₂ reduction |

A table showing improved efficiency, reduced training time, and optimized navigation.
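The table's figures can be cross-checked with simple arithmetic. The sketch below back-calculates the training power draw and emission factor implied by the reported numbers. It also assumes 10 operating hours per day, a duty cycle that is not stated in this write-up and is used only because it reproduces the reported 109.5 kWh.

```python
# Values reported in the table
TRAIN_MIN_PER_EPISODE = 52
EPISODES = 1000
TRAIN_ENERGY_KWH = 435
TRAIN_CO2_KG = 19.9
POWER_BEFORE_W = 100
POWER_AFTER_W = 70

# Assumption (not in the source): the AMR operates 10 hours a day, all year
HOURS_PER_DAY = 10
DAYS_PER_YEAR = 365

# Training: total hours, implied average power, implied emission factor
train_hours = TRAIN_MIN_PER_EPISODE * EPISODES / 60
implied_power_kw = TRAIN_ENERGY_KWH / train_hours      # about 0.50 kW
emission_factor = TRAIN_CO2_KG / TRAIN_ENERGY_KWH      # about 0.046 kg CO2/kWh

# Operation: a 30 W reduction over the assumed duty cycle
saved_w = POWER_BEFORE_W - POWER_AFTER_W
annual_savings_kwh = saved_w * HOURS_PER_DAY * DAYS_PER_YEAR / 1000
annual_co2_kg = annual_savings_kwh * emission_factor

if __name__ == "__main__":
    print(f"Training hours:        {train_hours:.1f}")
    print(f"Implied power (kW):    {implied_power_kw:.2f}")
    print(f"Implied kg CO2 / kWh:  {emission_factor:.3f}")
    print(f"Annual savings (kWh):  {annual_savings_kwh:.1f}")
    print(f"Annual CO2 saved (kg): {annual_co2_kg:.1f}")
```

Under these assumptions the operational rows are consistent with the training rows: the same implied emission factor turns 109.5 kWh into roughly 5 kg of CO₂.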

Performance Metrics After Optimization:

After Optimization Video:

The AMR now moves efficiently, avoids obstacles, and reaches the shelves quickly, demonstrating the effectiveness of reinforcement learning.

Scalability & Broader Applications

The methodologies developed in this project can be applied to:

Supply Chain Optimization: AI-driven logistics planning for warehouses and fulfillment centers.

Autonomous Vehicles: Path optimization for self-driving delivery robots.

Smart Cities: AI-driven urban traffic management to reduce congestion and fuel consumption.

Agriculture: Optimizing autonomous farming equipment to reduce energy use and soil disruption.

Airports: Enhancing baggage disembarkation processes using autonomous robots.

Snow Removal Optimization: AI-driven route planning for efficient snow plowing in metropolitan areas.

Hospital Logistics: Automated delivery triage for medications, food, and essential supplies.

Hospitality Services: AI-powered hotel room service and housekeeping deliveries.

Parking Optimization: Smart navigation for parking in metropolitan areas to reduce congestion and fuel consumption.

Conclusion

This project showcases how Reinforcement Learning can contribute to sustainability and efficiency in warehouse operations. By reducing energy use, shortening training, and streamlining navigation, AMRs can play a key role in greener supply chains. Future enhancements will include real-world validation, scalability improvements, and integration with renewable energy sources.